About us

Alternate format: Cyber Threats to Canada's Democratic Process: 2025 Update (PDF, 3.69 MB)

The Communications Security Establishment Canada (CSE) is Canada's centre of excellence for cyber operations. As one of Canada's key security and intelligence organizations, CSE protects the computer networks and information of greatest importance to Canada and collects foreign signals intelligence. CSE also provides assistance to federal law enforcement and security organizations in their legally authorized activities when they may need our unique technical capabilities.

CSE protects computer networks and electronic information of importance to the Government of Canada, helping to thwart state-sponsored or criminal cyber threat activity on our systems. In addition, CSE's foreign signals intelligence work supports government decision-making in the fields of national security and foreign policy, providing a better understanding of global events and crises, helping to further Canada's national interest in the world.

Part of CSE is the Canadian Centre for Cyber Security (Cyber Centre), Canada's technical authority on cyber security. The Cyber Centre is the single unified source of expert advice, guidance, services, and support on cyber security for Canadians and Canadian organizations. CSE and the Cyber Centre play an integral role in helping to protect Canada and Canadians against foreign threats, helping to ensure our nation's security, stability, and prosperity. Threats include foreign-based terrorism, foreign espionage, cyber threat activity, kidnapping of Canadians abroad and attacks on our embassies, among others.

Executive summary

Hostile actors are increasingly leveraging artificial intelligence (AI) tools in attempts to interfere in democratic processes, including elections, around the globe. Over the past two years, these tools have become more powerful and easier to use. They now play a pervasive role in political disinformation, as well as the harassment of political figures. They can also be used to enhance hostile actors' capacity to carry out cyber espionage and malicious cyber activities.

This report is an update to Cyber Threats to Canada's Democratic Process: 2023 Update (TDP 2023). Although the assessments contained in that report remain relevant, the rapid technological advances in AI over the past two years pose a new challenge. Accordingly, this update addresses exclusively threat actors' use of AI to target democratic processes globally and in Canada. While it is difficult to predict which disinformation or influence campaigns will gain traction, we assess that it is very unlikely (i.e. roughly 10 to 24% chance) that disinformation, or any AI-enabled cyber activity, would fundamentally undermine the integrity of Canada's democratic processes in the next Canadian general election. As AI technologies continue to advance and cyber adversaries improve their proficiency in using AI, the threat against future Canadian general elections is likely to increase.

Key findings and global trends

- In the last two years, hostile actors have increasingly used generative AI to target global elections, including in Europe, Asia, and the Americas. While TDP 2023 counted only one case of generative AI being used to target an election between 2021 and 2023, we observed 102 reported cases of generative AI being used to interfere with or influence 41 elections, or 27% of elections, held between 2023 and 2024. These cases involved the use of AI to create disinformation, actively spread disinformation online, and harass politicians. These new developments are driven by improvements in the quality, cost, efficiency, and accessibility of AI technology.

- While we were unable to attribute nearly half of the AI-enabled campaigns against global elections to specific actors, our research did identify a high number of threat activities attributed to Russia and the People's Republic of China (PRC). We assess it almost certain that these states, as well as a range of non-state actors, leverage generative AI to spread disinformation narratives, in particular to sow division and distrust within democratic societies. We assess it very likely that Russia and the PRC will continue to be responsible for most of the attributable nation-state AI-enabled cyber threat and disinformation activity targeting democratic processes.

- A range of threat actors are using generative AI to pollute the information environment. Of 151 global elections between 2023 and 2024, there were 60 reported AI-generated synthetic disinformation campaigns and 36 known and likely cases of AI-enabled social botnets. The increased use of generative AI marks a change in how disinformation is created and spread but not in the underlying motives and intended effects of disinformation campaigns. We assess it likely that such campaigns will continue to grow in scale as AI technology enabling synthetic disinformation becomes increasingly available.

- We assess it likely that, consistent with non-AI-enabled forms of disinformation, most foreign-created AI-generated content does not gain significant visibility in democratic societies. However, information that does gain visibility is usually wittingly or unwittingly amplified by popular domestic and transnational commentators. In addition, foreign actors have displayed an ability to create and spread viral disinformation using generative AI. We assess it likely that, as foreign actors refine their AI-enabled methods, their disinformation will gain greater exposure online. Nonetheless, it remains difficult to predict which piece of disinformation will gain exposure or find resonance online.

- The National Cyber Threat Assessment 2025-2026 (NCTA 2025-2026) documented that cybercriminals and state-sponsored actors are using generative AI to make social engineering attacks more personal and persuasive. We assess it likely that over the next two years, threat actors will integrate generative AI into social engineering attacks against political and public figures, as well as election management bodies. Although we have not yet observed an actor using generative AI to target elections in this way, we cannot rule out the possibility it has already happened.

- We further assess it likely that, over the next two years, actors targeting Canada will use a range of AI technologies to improve the stealth and efficacy of malware they seek to deploy against target voters, politicians, public figures, and electoral institutions.

- Nation states, in particular the PRC, are undertaking massive data collection campaigns, collecting billions of data points on democratic politicians, public figures, and citizens around the world. Advances in predictive AI allow human analysts to quickly query and analyze these data. We assess it likely that such states are gaining an improved understanding of democratic political environments as a result. By possessing detailed profiles of key targets, social networks, and voter psychographics, threat actors are almost certainly enhancing their capabilities to conduct targeted influence and espionage campaigns.

- Cybercriminals and non-state actors are using generative AI to create deepfake pornography of politicians and public figures—almost all the targets were women. While most cases do not appear to have been part of a deliberate influence campaign, deepfake pornography deters participation in democracy for those targeted. Further, we assess it likely that, on at least one occasion, that content was seeded to deliberately sabotage the campaign of a candidate running for office. We assess that these AI-enabled personal attacks will almost certainly increase given the wide availability of these models.

Key terms

- Machine learning: Methods or models that enable machines to learn how to complete a task from given data without explicitly programming a step-by-step solution.

- Generative AI: A subset of machine learning that generates new content based on patterns extracted from large volumes of training data. Generative AI can create many forms of content including text, images, audio, video, or software code.

- Predictive AI: A subset of machine learning that consumes input data but, rather than producing an image or a text, discovers patterns in data to classify new data, such as recognizing objects in images or words in speech.

About this report

This report provides an update to TDP 2023, published in December 2023. Given the changes in AI and machine learning technology since then, the report focuses on the threat posed by hostile actors using these technologies to target Canada's democratic process in 2025. The key findings stated in TDP 2023 remain relevant to the present threat environment.

Scope

This report considers AI-enabled cyber threat activity that affects democratic processes globally. Cyber threat activity (e.g. spear phishing, malware) is AI-enabled when it integrates AI components (generative or other machine learning methods) to compromise the security of an information system by altering the confidentiality, integrity, or availability of a system or the information it contains. This assessment also considers AI-enabled influence campaigns, which occur when cyber threat actors use generative AI and predictive AI to research intelligence targets and to covertly manipulate online information.

We discuss a wide range of cyber threats to global and Canadian political and electoral activities, particularly in the context of Canada's next general election, currently set for 2025. Providing threat mitigation advice is outside the scope of this report.

Sources

In producing this report, we relied on reporting from both classified and unclassified sources. CSE's foreign intelligence mandate provides us with valuable insights into adversarial behaviour. Defending the Government of Canada's information systems also provides CSE with a unique perspective to observe trends in the cyber threat environment.

More information

Further resources can be found on the Cyber Centre's cyber security guidance page and on the Get Cyber Safe website.

For more information about cyber tools and the evolving cyber threat landscape, consult the following publications:

- National Cyber Threat Assessment 2025-2026

- An Introduction to the Cyber Threat Environment

- How to identify misinformation, disinformation, and malinformation

Estimative language

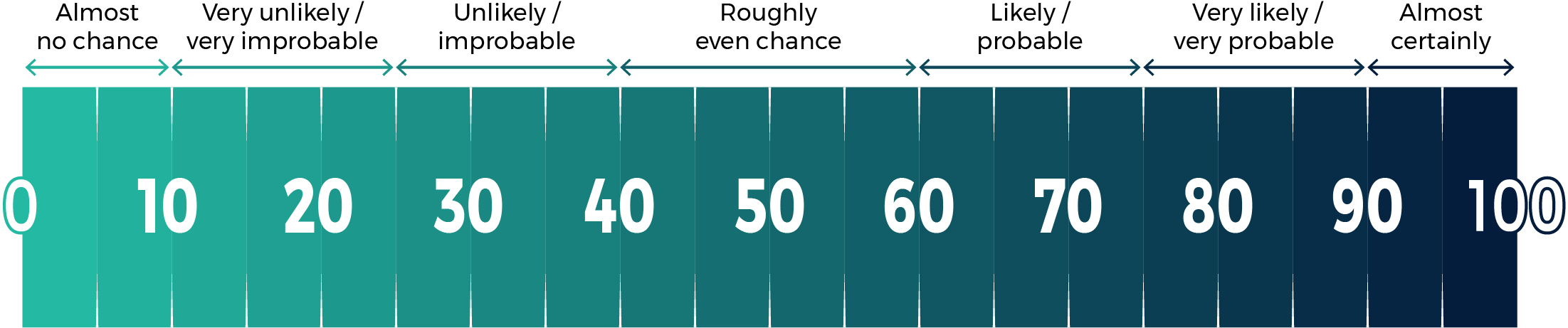

Our judgements are based on an analytical process that includes evaluating the quality of available information, exploring alternative explanations, mitigating biases, and using probabilistic language. We use terms such as "we assess" or "we judge" to convey an analytic assessment. We use qualifiers such as "possibly", "likely", and "very likely" to convey probability according to the chart below.

The contents of this report are based on information available as of January 27, 2025.

The chart below matches estimative language with approximate percentages. These percentages are not derived via statistical analysis, but are based on logic, available information, prior judgements, and methods that increase the accuracy of estimates.

Estimative language chart

- 1 to 9%: Almost no chance

- 10 to 24%: Very unlikely/very improbable

- 25 to 39%: Unlikely/improbable

- 40 to 59%: Roughly even chance

- 60 to 74%: Likely/probable

- 75 to 89%: Very likely/very probable

- 90 to 99%: Almost certainly
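Purely as an illustration, the bands in the chart above can be expressed as a simple lookup from an approximate probability to its estimative term. The function name `estimative_term` is hypothetical. How the sketch treats fractional values (each band runs up to the start of the next) and values of 0% or 100% (which the chart does not cover, so they are rejected) are assumptions of this sketch, not part of the Cyber Centre's analytic method.

```python
# Estimative-language bands from the chart, as (low %, high %, term).
# Assumption: the chart covers 1-99% only, so values outside that range are rejected.
ESTIMATIVE_BANDS = [
    (1, 9, "almost no chance"),
    (10, 24, "very unlikely"),
    (25, 39, "unlikely"),
    (40, 59, "roughly even chance"),
    (60, 74, "likely"),
    (75, 89, "very likely"),
    (90, 99, "almost certainly"),
]


def estimative_term(percent: float) -> str:
    """Return the estimative term whose band contains `percent` (hypothetical helper)."""
    for low, high, term in ESTIMATIVE_BANDS:
        # Bands are stated in whole percentages; treat each one as running up to
        # the start of the next band so that values such as 24.5 still map to a term.
        if low <= percent < high + 1:
            return term
    raise ValueError(f"{percent}% is outside the 1-99% range covered by the chart")


print(estimative_term(15))  # very unlikely
print(estimative_term(65))  # likely
```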

Introduction

Compared with earlier versions of Cyber Threats to Canada's Democratic Process, which focused on the broad cyber threat to national elections, this update focuses exclusively on the threat posed by AI. It provides information on how cyber threat actors are using powerful developments in AI, specifically generative AI and predictive AI, to target the electoral process, harm democratic actors, and mislead and disinform voters.

Canadian elections: An attractive target for foreign actors

Foreign threat actors are interested in targeting Canadian elections for a multitude of reasons. Canada is a member of the North Atlantic Treaty Organization (NATO) and the Five Eyes (FVEY) intelligence alliance, and it is economically and culturally integrated with the United States (US).

As an active player in the international community, Canada participates in key institutions such as the United Nations (UN), the Organization for Economic Cooperation and Development (OECD), the World Trade Organization (WTO), the International Monetary Fund (IMF), and the World Bank. As a major economy, Canada is a member of the Comprehensive and Progressive Agreement for Trans-Pacific Partnership (CPTPP), as well as multilateral forums such as the Group of 20 (G20) and the Group of 7 (G7). Government of Canada decisions on matters of military, trade, investment, and migration all affect the global community, as do the products of Canadian culture and science. We assess it almost certain that foreign actors target Canadian elections to influence how these decisions are made, as well as to weaken our capacity for decision-making entirely.

AI-enabled cyber threats to Canada's democratic process

The malicious use of AI is a growing threat to Canadian elections, a point first noted in the Cyber Centre's 2019 Update: Cyber Threats to Canada's Democratic Process. Generative AI at that time was expensive and required technical knowledge to use, but it has since become less costly and more accessible to non-technical users. User-friendly web interfaces, easy prompts, and few regulations or guardrails make it easier for more threat actors to engage in malicious cyber activity.Footnote 1 The speed and quality of output from generative AI models have also markedly improved, for instance from the first Generative Pre-trained Transformer (GPT-1) to GPT-4, which is now used for high-quality synthetic content generation.Footnote 2 These and related technologies have enabled adversarial actors to generate persuasive deepfakes and design convincing chatbots capable of spreading disinformation personalized to their target audience. The customization of content to specific targets with generative AI has also been used to enhance phishing attacks and enable new forms of digital harassment, cybercrime, and espionage. Predictive analytics allow data processing at a sophistication and volume unachievable by non-AI-enabled methods, allowing human analysts to swiftly identify targets for potential hacking operations or populations to be flooded with targeted propaganda.Footnote 3

The increased accessibility of generative AI compounds the risk to countries like Canada, whose citizens and infrastructure are highly connected. According to DataReportal, 94.3% of Canadians are registered Internet users while 80% of Canadians are active users of social media.Footnote 4 Survey data from Statistics Canada indicate that the majority of Canadians receive their news and information from the Internet or social media, increasing Canadians' exposure to AI-enabled malign influence campaigns.Footnote 5

Although Canada's general elections are conducted by paper ballot, much of the surrounding electoral infrastructure is digitized, including voter registration systems, election websites, and communications between election management bodies and their employees. This creates a threat surface vulnerable to malicious cyber activity aimed at compromising the confidentiality, integrity, or availability of the underlying system before or during an election period. Cyber actors can use generative AI to quickly create targeted and convincing phishing emails, potentially allowing them illicit entry to this infrastructure, where they can install malware or exfiltrate and expose sensitive information.Footnote 6 Canadians, their data, and public and political organizations are all potential targets of AI-enabled influence operations. Virtually every politician, candidate, and media personality has an online presence from which data can be mined and used to create fake content. Canadian political parties hold terabytesFootnote * of politically relevant data about Canadian voters, as do commercial data brokers.Footnote 7

PRC state-affiliated actors steal United Kingdom voter registry data

In March 2024, the United Kingdom (UK) government attributed a hack of the UK Electoral Commission to PRC state-affiliated actors. In addition to commission emails, hackers gained access to copies of electoral registries with the names and addresses of anyone registered to vote between 2014 and 2021.Footnote 8 AI-enabled cyber actors can use data such as this to develop propaganda campaigns tailored to specific audiences.

We assess foreign actors are almost certainly attempting to acquire this data, which they can then weaponize against Canadian democratic processes. Cyber actors can combine purchased or stolen data with public data about Canadians to create targeted propaganda campaigns, built on predictive analytics and using AI-generated content.Footnote 9 Malicious cyber actors have also used social botnets to take advantage of social media recommendation algorithms, amplify disinformation narratives, and even engage directly with voters in other countries.Footnote 10 Based on this capacity, we assess that cyber actors can almost certainly target Canadian voters in the same manner.

We assess that countries pursuing adversarial strategies against Canada and our allies almost certainly possess the capabilities illustrated above. We assess that the PRC is likely to employ these capabilities to push narratives favourable to its interests and spread disinformation among Canadian voters. For Russia and Iran, we assess that Canadian elections are almost certainly lower priority targets compared to the US or the UK. We also assess that, if these states do target Canada, they are more likely to use low-effort cyber or influence operations.

Domestic actors, as well as activists and thrill-seekers based abroad, also possess access to off-the-shelf generative AI tools. We assess such actors will almost certainly use these tools to spread disinformation ahead of a national election. We assess that increased geopolitical tensions between Canada and other states are likely to result in cyber threat actors, including non-state actors, using AI-enabled tools to target Canada's democratic process. Ahead of the 2021 general election, for example, known or likely PRC-affiliated actors spread non-AI-enabled disinformation about politicians running for office whom they assessed to be anti-PRC.Footnote 11

Changes in AI technology

Generative AI is a type of artificial intelligence that generates new content by modelling features of data from large datasets. Generative AI can create new content in many forms, including text, image, audio, or computer code. Similar to generative AI, predictive AI consumes input data but, rather than producing an image or a text, it applies the patterns it has discovered to make an informed prediction to classify new data. As a result, software can quickly assess large pools of data to identify patterns and perform analysis that would otherwise require time-consuming and costly manual annotation by a team of humans. Both types of AI rely on machine learning, which is the process by which machines learn how to complete a task from given data without explicitly programming a step-by-step solution.

Large language models (LLMs)

Large language models (LLMs) are machine learning models that are trained on very large sets of language data using self- and semi-supervised learning. Early language models generated text via next word prediction, but more recent LLMs have significantly built on this function—learning from very large text datasets and sophisticated modelling—so that users can enter prompts on applications such as ChatGPT to output complete sentences or generate entire documents on a given topic, in a given style.Footnote 14
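To make "next word prediction" concrete, the toy sketch below shows the idea in its simplest form: a bigram model that counts which word follows which in a tiny training text, then repeatedly appends the most frequent continuation. This is a hypothetical illustration only. Modern LLMs use neural networks trained on vastly larger datasets and do not work from raw word counts, and the corpus and the function names `train_bigrams` and `generate` are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def generate(following: dict[str, Counter], start: str, length: int) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    out = [start]
    for _ in range(length):
        candidates = following.get(out[-1])
        if not candidates:
            break  # no observed continuation for this word, so stop early
        # On ties, Counter.most_common keeps the order in which words were first seen.
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


corpus = "voters read the news online and voters share the news with friends"
model = train_bigrams(corpus)
print(generate(model, "voters", 4))  # voters read the news online
```

Each step conditions only on the previous word, which is why such early models produced short, often incoherent text. LLMs condition on much longer contexts, which lets them produce complete documents in a given style.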

The growing accessibility and diminishing cost of these technologies have enabled their use in cybercrime, in spreading disinformation, and in attacking democratic infrastructure.Footnote 6 Through either a fake or compromised account, a threat actor can use an LLM to write plausible communication that persuades the target to click a malicious link or inadvertently share their credentials or sensitive information.

LLMs can quickly produce tailored phishing products

To demonstrate the potential threat, a researcher at the University of Oxford used ChatGPT and other LLMs to draft (but not send) personalized spear phishing emails to over 600 members of the British Parliament.Footnote 12 Research has shown that LLMs can produce these emails at much faster rates than human researchers and are able to persuade targets to click on malicious links at rates comparable to phishing emails created by humans.Footnote 13

The rise of deepfakes

Deepfakes refer to pictographic, video, and audio content that has been altered or created by a machine learning model. They can be distinguished from "cheap fakes," which are also designed to deceive, but, because they are created with less sophisticated software, are of lower quality and easier to identify.Footnote 16

Although deepfake technology has existed since 2014, it was difficult to use and computationally intensive until the 2021-2022 release of image generation models such as DALL-E and Midjourney.Footnote 17

Today, a convincing deepfake can be made from only a few seconds of video or audio, requiring little technical expertise from the user.Footnote 19 Deepfakes are being used against elections globally, primarily to spread disinformation.Footnote 20 A deepfaked voice or video call can also be used by a malicious actor to trick a target into sharing sensitive information. Although we have not yet observed this in the context of an election, cybercriminals have successfully used generative AI in this manner to carry out billions of dollars' worth of fraud.Footnote 21

AI-enabled scammers steal $35 million

In 2024, hackers used a deepfake to impersonate the Chief Financial Officer (CFO) of a company based in Hong Kong. During a video call with a financial worker, they tricked the worker into transferring nearly $35 million (CAD) to the hackers' bank accounts.Footnote 18

Machine learning analytics and the exploitation of big data

Machine learning models are powerful tools for analyzing big data. Master datasets are created by collecting, purchasing, or acquiring huge amounts of data, measured in peta- or exabytes,Footnote ** and require powerful computers to store, query, and analyze. Advances in chip design, software architecture, and computing power have enabled advanced analytics, vastly increasing the speed and accuracy with which big data can be processed.Footnote 22

Machine learning analytics are used by social media companies to identify and promote content assessed as most likely to sustain and generate engagement from a user. Social media platforms have designed their machine learning recommendation algorithms to favour emotionally charged and polarizing content, which can be used to misinform, radicalize, and divide users.Footnote 23

Malicious actors may take advantage of these algorithms to promote their favoured political narratives ahead of elections, while certain platforms themselves have been noted to amplify biased content.Footnote 24

In the hands of adversarial actors, big data can be exploited by machine learning to provide intelligence that enables threat actors to influence targets, including through both human operations and targeted propaganda.Footnote 25 The collation of data, for example, can produce profiles of users—or psychographic information individualized to each voter or voter group, reflecting their attitudes, aspirations, values, and fears.Footnote 26 Likewise, real-time data analytics, capable of collecting and processing data as it is created, enable instantaneous feedback responses and on-demand intelligence reporting.Footnote 27

Russian propaganda agencies purchase targeted advertising to target US federal elections

According to the US Federal Bureau of Investigation (FBI), in fall 2023, Russian propaganda agencies purchased Meta's advertising services, which rely on predictive AI, to direct propaganda towards groups that Russian agencies had assessed as receptive towards given propaganda narratives.Footnote 29

Global trends

The Cyber Centre has analyzed cyber threat activity targeting national-level elections since 2015. This update focuses on AI-enabled threats, with data starting from 2023, the year in which our research first indicated that threat actors used generative AI to target a democratic process.

Since 2023, we have observed an increase in the amount of AI-enabled cyber threat activity targeting elections worldwide. We assess that the data almost certainly underestimates the total number of events targeting global democratic processes, as not all cyber activity is reported or detected. Similarly, deepfakes and LLM-generated texts can be difficult to identify or distinguish from human-generated content. Based on our observations from 2023 and 2024, we identified four global trends.

Figure 1: Growth in AI-enabled threats to democratic processes from 2023 to 2024

This bar chart shows the increase from 2023 to 2024 in the percentage of elections targeted globally by 3 types of AI-enabled threats:

| Year | Synthetic disinformation campaign | Social botnet campaign | AI-enabled harassment campaign |
|---|---|---|---|
| 2023 | 14% | 6% | 0% |
| 2024 | 27% | 16% | 6% |

Types of AI-enabled threats

Synthetic disinformation campaign: The use of AI to create disinformation to be spread online, pushing a consistent message or theme, or as part of a sporadic and uncoordinated effort to create disinformation about candidates running for office.

Social botnet campaign: Automated botnets, characterized by the use of LLMs to generate content or AI-generated profiles.

AI-enabled harassment campaign: The use of AI to aggressively pressure or intimidate.

Trend 1: Generative AI is polluting the information ecosystem

Between 2023 and 2024, there were 124 national-level elections around the globe, as well as the European Union (EU) parliamentary elections in 2024, which took place across the EU's 27 member states. Of these 151 total elections, Cyber Centre research indicates that 40 were targeted by actors using generative AI to create or spread disinformation at least once during the 12 months leading up to the election. Since some countries were targeted multiple times, we identified 60 unique synthetic disinformation campaigns, meaning hostile actors used generative AI to create disinformation to be spread online. These campaigns either pushed a consistent message or theme or were part of a sporadic and uncoordinated effort to create disinformation about candidates running for office. These include cases where AI imagery, audio, or text was used to confuse or disinform voters.

We also detected 36 known or likely cases where automated botnets were used to spread disinformation. These social botnets were often characterized by their use of AI-generated profile pictures, while the bots themselves proved capable of posting links, amplifying content, and interacting with authentic users. On several occasions, researchers and independent watchdogs observed social botnets attempting to manipulate social media recommendation algorithms. Affected platforms included X, Facebook, TikTok, WeChat, Telegram, and country-specific platforms such as Taiwan's PTT.Footnote 28

Figure 2: AI-enabled disinformation campaigns targeting democratic processes

The data for this chart is as follows:

| Year | Synthetic disinformation campaigns | Social botnet campaigns |
|---|---|---|
| 2023 | 7 | 3 |
| 2024 | 53 | 33 |
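A short arithmetic check shows how the yearly counts in Figure 2 add up to the totals given under Trend 1 (60 synthetic disinformation campaigns and 36 social botnet cases) and how sharply each type grew between 2023 and 2024. The variable names are illustrative only, and the growth factors are a simple ratio of the two yearly counts.

```python
# Figure 2 counts by year: (synthetic disinformation campaigns, social botnet campaigns)
figure_2 = {2023: (7, 3), 2024: (53, 33)}

synthetic_total = sum(s for s, _ in figure_2.values())
botnet_total = sum(b for _, b in figure_2.values())
print(synthetic_total, botnet_total)  # 60 36

# Ratio of 2024 to 2023 counts for each campaign type
print(round(53 / 7, 1), round(33 / 3, 1))  # 7.6 11.0
```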

Trend 2: AI involvement uncertain in phishing against electoral institutions

Between 2023 and 2024, we observed three reported cases where threat actors launched phishing campaigns in attempts to harvest credentials or engage in hack-and-leak operations against political and government organizations.Footnote 30

While we cannot assess that generative AI was used in these cases, we note that the frequency with which adversaries have used LLMs to enhance their phishing attacks in other contexts has rapidly increased over the past two years.Footnote 31 Likewise, over the past two years, AI technology to improve and speed up phishing campaigns has proliferated on the dark web, as has the discovery of new techniques to circumvent safety controls on legitimate technology.Footnote 32 We assess that AI-enabled phishing attacks against democratic targets will almost certainly increase over the next two years.

Trend 3: Advanced targeting based on machine learning analytics

It is difficult to observe in every case how nation states are using machine learning to analyze big data. However, we have observed the PRC and, to a lesser extent, Russia engaging in massive data collection campaigns, typically accomplished through open-source acquisition, covert purchase, and theft.Footnote 33 Datasets of interest include information that is expressly political, such as voter registries or campaign data, or specific information that reveals, for example, an individual's shopping habits, health records, and browsing and social media activity.Footnote 34

As assessed in NCTA 2025-2026, well-resourced nation states are very likely relying on AI to process and analyze these datasets, producing information for follow-on intelligence operations, including against elections.Footnote 35 Hostile actors are also using this data to enhance surveillance of, or online operations against, diaspora groups and their political representatives.Footnote 36 Separately, according to an FBI affidavit, Russia covertly used targeted advertising products sold by social media companies and search engines to conduct its propaganda efforts.Footnote 37

Trend 4: Threat actors are using generative AI to harass public figures

Of the 151 elections we assessed from 2023 to 2024, at least 6 had instances where deepfakes were used to harass or intimidate politicians. The deepfakes used in this manner are of an exclusively sexual nature and have primarily targeted women politicians or 2SLGBTQI+ identifying persons in politics. This is consistent with a broader trend concerning AI: deepfake pornography makes up 98% of all deepfake videos online and 99% of those deepfakes target women.Footnote 41


Women disproportionately targeted

In June 2024, British media reported that 400 digitally altered pornographic pictures of more than 30 high-profile women politicians had been found online.Footnote 38 In Greece, AI was used to create a nude image of a party leader, sparking derogatory homophobic comments.Footnote 39 Ahead of Bangladesh's 2024 elections, photographs were shared online falsely depicting a woman politician in a bikini.Footnote 40

AI is being used in this way to humiliate, intimidate, and exclude targeted persons from political participation. While these efforts appear primarily criminal or sadistic in nature, we assess it likely that, on at least one occasion, content was seeded to deliberately sabotage the campaign of a candidate running for office.Footnote 42

Main threat actors using AI to target democratic processes

Around 49% of the AI-enabled activity that we observed, all of which involved the spread of disinformation or the harassment of politicians, could not be credibly attributed to a specific actor. From our research, the majority of attributed AI-enabled cyber threat activity emanates from state-sponsored actors with links to Russia, the PRC, and Iran. We assess their goal is almost certainly to break democratic alliances and entrench divisions within and between democratic states while also advancing their geopolitical goals.Footnote 43 We also note that political parties have maliciously used AI within their own countries, typically through the spread of disinformation.

Figure 3: Attributions of threats to democratic processes

Long description - Figure 3: Attributions of threats to democratic processes

This bar chart shows the percentage of AI-enabled threat events by 6 types of threat actors:

- Unattributed: 49%

- Russia/Russia-aligned: 19%

- Domestic actors: 17%

- PRC/PRC-aligned: 11%

- Iran: 2%

- Other: 2%

Russia

Consistent with statistics we gathered in TDP 2023, Russia and pro-Russia non-state actors remain the most aggressive among attributed actors targeting global elections. We assess Russia's cyber threat activity is almost certainly aimed at harming the electoral prospects of parties or candidates that Russia perceives as pro-West in ideology and foreign policy orientation. Over the past two years, at least four prominent Russian networks have used AI to spread disinformation in distinctive ways.

Although we have not definitively observed Russian actors using AI to enhance their phishing or hack-and-leak efforts against elections, we assess it almost certain that they possess this capability. This assessment is based on similar activity undertaken by criminal groups and other nation states.Footnote 44 Likewise, we assess it very likely that Russia has the capability to use AI to improve the efficacy and stealth of malware to deploy against target assets.Footnote 45

With regard to AI-enabled disinformation, a network known as Doppelganger (founded in April 2022) is operated by two Russia-based companies with known links to the Russian state.Footnote 46 Doppelganger relies on AI to spoof legitimate news websites, such as Der Spiegel or The Guardian, while using LLMs to generate articles containing disinformation.Footnote 47 A similar network known as CopyCop uses LLMs to create disinformation articles and deliver them via websites that purport to be news organizations based in western states.Footnote 48 Storm-1679, a third network active since 2023, relies on generative AI to spam media organizations, researchers, and fact checkers with requests for story verification, in an effort to overwhelm their anti-disinformation resources.Footnote 49 Each of these networks has leveraged generative AI to create content as well as social botnets to amplify disinformation across various online mediums.

While the quality of Russian disinformation has varied, Russia and pro-Russia non-state actors have displayed an ability to create bespoke propaganda, designed to enhance its virality and political impact on the target state. In October 2024, Storm-1516 released a tailored deepfake of an individual claiming to have been abused by US vice-presidential candidate Tim Walz.Footnote 50 The attack was a blend of methods, combining disinformation with sexual degradation without concern for the intermediary victim.

US vice-presidential candidate Tim Walz deepfake

The deepfake depicted an individual claiming to have been abused by Walz during Walz's former job as a high school teacher. Although the video was fake, the person in the video appeared to have been an actual student at Walz's school. To create the deepfake, Storm-1516 likely researched students at Walz's former school, used AI to create a fake video based on their likeness, and then deployed it against Walz.Footnote 50

Despite these efforts, we assess it likely that Russia's campaigns generally do not gain significant visibility without the amplification of witting or unwitting actors from within the targeted state.Footnote 51 According to German intelligence, Doppelganger's 700 fake websites garnered only 800,000 viewsFootnote *** across all its campaigns between November 2023 and August 2024.Footnote 52 Another researcher noted that most of the links shared by Doppelganger received little or no engagement.Footnote 53 The Tim Walz abuse claim only gained significant attention after it was covered by influential American commentators.Footnote 50 Efforts by the targeted states to take down the online infrastructure supporting these websites, along with deplatforming operations carried out by social media companies, have blunted their overall visibility.Footnote 54 Nonetheless, we assess that Russia almost certainly retains the intent and capability to continue using generative AI to pollute the democratic information environment. Recent moves by social media companies away from professional fact checking will likely increase user engagement with misleading content.Footnote 55

The People's Republic of China

The PRC poses a sophisticated and pervasive threat in the cyber domain. Using cyber and non-cyber means, the PRC carried out an aggressive malign influence campaign around Taiwan's 2024 presidential election.Footnote 56 With regard to AI, Taiwan-based researchers identified a likely social botnet composed of over 14,000 accounts across Facebook, X, YouTube, TikTok, and PTT, a Taiwanese social media platform.Footnote 57 The profile avatars for the bots were in some instances created by generative AI, while the bots themselves exhibited coordinated behaviour and similarity in commenting patterns. The accounts echoed narratives pushed by PRC state media and often sought to denigrate the US-Taiwanese relationship and harm the electoral candidacy of Lai Ching-Te, the leader of the Democratic Progressive Party.Footnote 57 The botnet also shared and amplified content that disparaged the character of various Taiwanese politicians, including the leak of an alleged deepfake sex tape posted to a pornographic website.Footnote 58

Spamouflage Dragon

In 2023, the Spamouflage network spread disinformation targeting dozens of MPs, including the Prime Minister, the leader of the opposition, and several members of Cabinet. The network has also used generative AI to target Mandarin-speaking figures in Canada.Footnote 59

Similarly, Spamouflage Dragon, a probable PRC-driven propaganda campaign that has targeted Canada in the past, has used generative AI to create disinformation to influence foreign voters ahead of democratic elections internationally.Footnote 60

Although these efforts did not garner much attention, independent research organizations have noted that the PRC is refining its propaganda efforts, which are starting to gain more engagement from authentic citizens in the targeted electorate.Footnote 61

As stated earlier, the PRC conducts massive data collection operations against Western populations. Although these data serve various purposes, we assess it likely that the PRC has both the ability and intent to use machine learning to analyze these data to produce detailed intelligence profiles of potential targets connected to democratic processes.Footnote 62 These include voters, politicians, members of the media, public servants, and activists.Footnote 63 Working in cooperation with PRC-based technology companies, the PRC uses these data to aid intelligence work, including to:

- inform decision-making

- identify recruitment opportunities

- enhance influence operationsFootnote 64

We assess it almost certain that the PRC will continue to harvest politically relevant information from Western societies.

We assess it likely that the PRC has leveraged TikTok, a social media platform owned by the PRC-based company ByteDance, to promote pro-PRC narratives in democratic states and to censor anti-PRC narratives.Footnote 66 According to the Network Contagion Research Institute, the PRC "is deploying algorithmic manipulation in combination with prolific information operations to impact user beliefs and behaviours on a massive scale."Footnote 67 We assess it likely that these operations have, on at least one occasion, targeted voters ahead of an election.Footnote 68

Iran

According to the FBI, in 2024, the Islamic Revolutionary Guard Corps (IRGC) used spear phishing to hack into one US presidential campaign and attempt to hack into the campaign of a second candidate.Footnote 69 It remains unclear whether the IRGC used AI in this case. However, the IRGC has been observed in other cases using LLMs to generate targeted and convincing emails enticing their targets to click a link (or open an attachment) that leads to a malicious webpage or downloads malware.Footnote 70

We assess it very likely that a hostile actor like the IRGC could integrate AI into a similar cyber attack against election infrastructure. The IRGC has also spoofed login pages to harvest the credentials of their victims, a task which, like phishing, can be enhanced by AI technologies.Footnote 71 It is also likely that the IRGC has used LLMs to improve their malware code, disable antivirus software, and evade detection.Footnote 72

IRGC hack of US presidential campaign

During a 2024 hack of a US presidential campaign, the IRGC exfiltrated sensitive information and attempted to share it with the media and with individuals that the IRGC believed to be associated with rival campaigns. The media and rival campaigns rebuffed these efforts, reporting them to law enforcement, which minimized the effects of the operation.Footnote 65 Although it is unclear if AI was used by the IRGC in this case, the IRGC has been known to use LLMs in similar activities.

Cybercriminals and non-state actors

Cybercriminals and non-state actors are almost certainly responsible for the vast majority of non-consensual deepfake pornography targeting politicians, public figures, and people in the media. Cybercriminals also prolifically conduct hack-and-leak operations against commercial and public databases, including in democratic states.Footnote 73 While the Cyber Centre defines cybercrime as financially motivated cyber threat activity, nation states are known buyers of stolen data.Footnote 74 Stolen data can be used for various purposes, and we assess it likely that some of this data is used by nation states to enhance their AI- and machine learning-enabled operations against democratic processes.

Cybercriminals are also known to take advantage of events with high media coverage, such as an election, as an opportunity to commit scams and fraud against voters.Footnote 75 We assess it very likely that, over the next two years, cybercriminals will use deepfakes and AI-enabled phishing to deploy a range of cyber attacks against democratic processes. These include more disruptive forms of cybercrime like ransomware.Footnote 76

Non-state actors or domestic influencers may wittingly or unwittingly amplify AI-enabled foreign disinformation. Given that such actors typically form more connected and trusted links within domestic social networks, their impact on the amplification of disinformation is larger than that of regular users. As noted earlier, attempts by Russia-linked actors to seed salacious stories about Tim Walz failed to generate much attention until key American influencers engaged with and amplified the content from their platforms.Footnote 50

Implications for Canadian elections

We assess that the PRC, Russia, and Iran will very likely use AI-enabled tools to attempt to interfere with Canada's democratic process before and during the 2025 election. We assess it likely that cybercriminals will take advantage of election-related opportunities in Canada to conduct scams and fraud, without being uniquely focused on exploiting Canadian elections.

When targeting Canadian elections, threat actors are most likely to use generative AI as a means of creating and spreading disinformation, designed to sow division among Canadians and push narratives conducive to the interests of foreign states. We assess it very likely that PRC-affiliated actors will continue to specifically target Chinese-diaspora communities in Canada, pushing narratives favourable to PRC interests on social media platforms.Footnote 77 Since Canadians share a common information ecosystem with the US, Canadians have already been exposed to AI-enabled disinformation targeting US citizens.Footnote 78 It is almost certain that this trend will continue. However, the extent to which any given piece of disinformation will gain visibility or resonance among Canadians is unpredictable.

Canadian politicians and political parties are likely to be targeted by threat actors seeking to conduct hack-and-leak operations. As we have observed in other contexts, we assess it likely that threat actors will leverage LLMs to engage with targets as part of an extended phishing operation. However, we assess it very unlikely that hostile actors will carry out a destructive cyber attack against election infrastructure, such as attempting to paralyze telecommunications systems on election day, outside of imminent or direct armed conflict.

Finally, Canadian public figures, especially women and members of the 2SLGBTQI+ community, are at heightened risk of being targeted by deepfake pornography. Without updated legal and regulatory guidelines, we assess it very likely that the spread of this content will continue unabated.

Looking ahead

Cyber threat activity continues to be used to target democratic processes globally. The Cyber Centre, as part of CSE, produces advice and guidance to help inform Canadians about the cyber threats to Canada's elections.

We provide cyber security advice and guidance to all major political parties, in part through publications such as the Cyber Security Guide for Campaign Teams and Cyber Security Advice for Political Candidates. Representatives from CSE form part of Canada's Security and Intelligence Threats to Elections (SITE) task force.

We work closely with Elections Canada to protect its infrastructure and defend our elections from cyber threats. CSE is authorized by the Minister of National Defence to conduct defensive cyber operations (DCO) to protect the Government of Canada, including Elections Canada. This authorization allows CSE to disrupt malicious cyber activities against those systems. CSE is also authorized to protect systems of importance to the government, such as those related to a general election.

Additionally, the Cyber Centre's sensors program helps defend Elections Canada's infrastructure by monitoring and mitigating potential cyber threats. We also provide expert advice through publications like Security Considerations for Electronic Poll Book Systems and Cyber Security Guidance for Elections Authorities to help electoral bodies enhance their cyber security measures.

To further protect our democratic institutions, the Privy Council Office has published resources for how to combat disinformation and foreign interference. These include toolkits for community leaders, elected officials, public office holders, and public servants.

We encourage Canadians to consult the following resources related to the themes in this assessment:

- Cyber Security Guidance on Generative Artificial Intelligence (AI)

- Guide on Security Considerations When Using Social Media in Your Organization

- Cyber Security Guidance on Identifying and Countering Online Disinformation

- Guidance on Using Social Media Safely

- National Cyber Threat Assessment 2025-2026

- How to Identify Misinformation, Disinformation, and Malinformation

- Fact Sheet for Canadian Voters

CSE's Get Cyber Safe campaign continues to publish relevant advice and guidance throughout the year to inform Canadians about cyber security and the steps they can take to protect themselves online.